As a leading expert in the field of (big) data solutions, we help develop the technologies that are going to shape our future. In this blog we discuss the future of smart cities, which are about to become not only a lot smarter, but also a lot more citizen-friendly.

The idea of a smart city generally conjures up images of a technological metropolis. However, in the future, it won’t just be big capital cities that are powered by smart technology. The increasing presence and availability of the technologies required to make a city smart will lead every city to become a “smart city”.

The smart city of the future runs like clockwork. Traffic lights are no longer needed, as the driverless cars and the city’s IoT systems route traffic intelligently and prevent traffic jams from occurring. Passengers rarely have to wait longer than a few minutes for a car to take them to their destination, as all cars are shared and continuously move around along the most efficient routes. The city is also clean: smart waste solutions ensure that trash cans around the city are emptied frequently and efficiently. On top of that, air quality is constantly monitored, and action is taken to keep pollution from rising above acceptable levels.

The city is not just a smoothly running machine, however. The smart city of the future is, above everything, a people-oriented city. Citizens are always invited to give their opinion on current developments or improvements for the future through an accessible survey system. Additionally, the future needs and opportunities of citizens are anticipated: elderly citizens are informed early about possibilities for assistive technology, and citizens in need are proactively approached about support programs.

Setting up a smart city requires a thorough understanding of the way a city functions, where it struggles and how it can be improved. Exploratory analytics on an integrated set of urban data helps city planners set up smart cities in an optimal manner. Technologies for collecting, combining and running analytics on large amounts of disparate data will be essential for accomplishing this. Enabling seamless traffic management in and around cities requires cars to communicate not only with each other, but also with current and historical traffic and congestion data. This will require an infrastructure capable of rapid real-time data streaming, integration and processing on a large, distributed scale. Such an infrastructure lies at the root of many other smart city initiatives, such as smart waste solutions and air-quality monitoring programs.
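To make the idea of combining live and historical traffic data a little more concrete, here is a minimal sketch in Python. It is an illustration only, not a description of any particular platform: the `SpeedReading` and `CongestionMonitor` names, the road segment, the window size and the 60% threshold are all assumptions chosen for the example. A real deployment would sit on a distributed streaming infrastructure rather than an in-memory list.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class SpeedReading:
    """One hypothetical sensor or vehicle report for a road segment."""
    segment_id: str
    speed_kmh: float


class CongestionMonitor:
    """Compares a rolling window of live speeds with a historical baseline."""

    def __init__(self, historical_kmh, window_size=5, threshold=0.6):
        self.historical_kmh = historical_kmh  # segment_id -> typical speed (assumed known)
        self.threshold = threshold            # fraction of typical speed that counts as congested
        self.recent = defaultdict(lambda: deque(maxlen=window_size))

    def ingest(self, reading):
        """Add one reading and return True if the segment looks congested."""
        window = self.recent[reading.segment_id]
        window.append(reading.speed_kmh)
        baseline = self.historical_kmh.get(reading.segment_id)
        if baseline is None:
            return False  # no history for this segment, so no verdict
        return mean(window) < self.threshold * baseline


# Simulated stream: traffic on one segment slows down over six readings.
monitor = CongestionMonitor({"ring-road-north": 80.0})
stream = [SpeedReading("ring-road-north", s) for s in (78, 75, 40, 30, 25, 20)]
flags = [monitor.ingest(r) for r in stream]
```

In this sketch a segment is only flagged once the rolling average drops below the chosen fraction of its historical speed, so a single slow reading does not trigger rerouting on its own.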

Running a continuous system for citizen participation requires a survey system that can securely handle streams of possibly sensitive response data and integrate them into understandable, accurate reports that reflect citizen feedback.
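As a rough illustration of what handling sensitive responses and turning them into reports could look like, the Python sketch below replaces citizen identifiers with a keyed hash and only reports topics with enough respondents. Everything here is an assumption made for the example: the `pseudonymize` and `build_report` helpers, the placeholder key, the sample topics and the minimum group size of three. A production system would also need proper key management, access control and legal review.

```python
import hashlib
import hmac
from collections import defaultdict
from statistics import mean

SECRET_KEY = b"replace-with-a-managed-secret"  # placeholder, not a real key


def pseudonymize(citizen_id: str) -> str:
    """Replace an identifier with a keyed hash so reports never carry raw IDs."""
    return hmac.new(SECRET_KEY, citizen_id.encode("utf-8"), hashlib.sha256).hexdigest()


def build_report(responses, min_group_size=3):
    """Average scores per topic, skipping topics with too few respondents."""
    scores = defaultdict(dict)  # topic -> {pseudonym: latest score}
    for citizen_id, topic, score in responses:
        scores[topic][pseudonymize(citizen_id)] = score
    return {
        topic: {"respondents": len(by_person), "average": round(mean(by_person.values()), 2)}
        for topic, by_person in scores.items()
        if len(by_person) >= min_group_size
    }


# Hypothetical responses as (citizen id, topic, score from 1 to 5).
responses = [
    ("citizen-1", "cycling lanes", 4),
    ("citizen-2", "cycling lanes", 5),
    ("citizen-3", "cycling lanes", 3),
    ("citizen-1", "cycling lanes", 2),  # a later answer replaces the earlier one
    ("citizen-4", "street lighting", 1),
]
report = build_report(responses)
```

Leaving out small groups is one simple way to keep an individual answer from being read straight off a report; the right threshold depends on the city and the sensitivity of the question.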

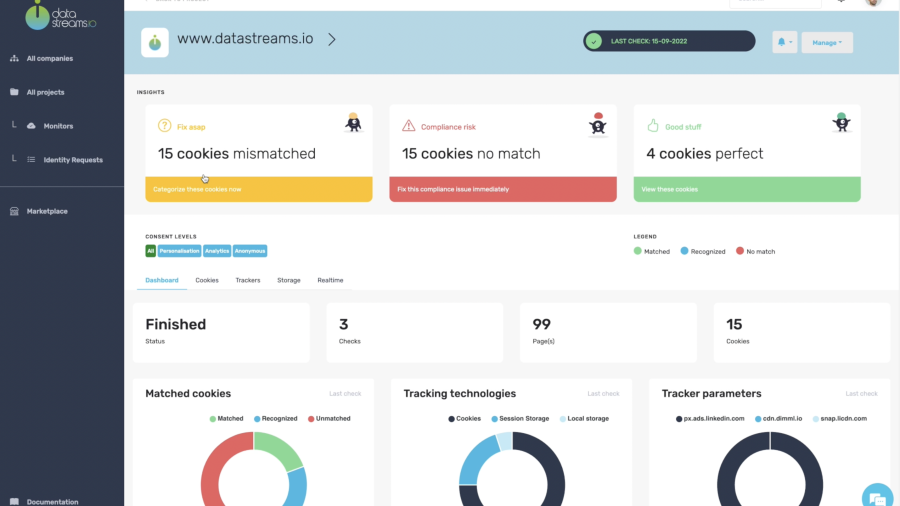

A platform built for the secure handling of sensitive data is the perfect foundation to build such a system on. A smart city relies to a large extent on citizens’ willingness to share their data and to engage with the smart city technology. This is especially important for proactively supporting citizens in need. Because of this, citizens need to be confident that their data is handled in a transparent, responsible, secure and compliant manner. A platform that has proven to handle sensitive data in this manner is crucial for earning citizen trust and support for smart city initiatives.

What can you do in the development of a Smart City? Learn how you can collaborate with your partners with our

Feel free to contact me, and I will be more than happy to answer all of your questions.
